Quick Start Guide

- Crawl your site using Screaming Frog.

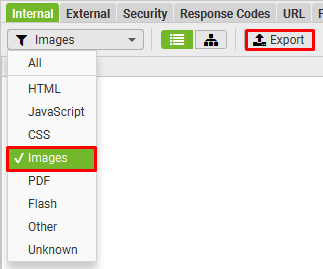

- Export all images by selecting Images from the drop down under the Internal tab. (Make sure to export as a .csv not an .xlsx file).

- Setup the Cloud Vision API and download the JSON file. (Instructions here:

- Update the Constants section to point to the Google Vision JSON file and the source image .csv file.

- Set a location and file name for the save file.

- Download the script here.

- Run the script!

Please note: Some basic knowledge of Python is required to get this up and running. e.g. how to install and run scripts.

The Benefits of Higher Resolution Images

Increased Conversions – High-quality images can significantly enhance the effectiveness of both paid and organic marketing efforts, leading to higher conversion rates.

Google Image Search Traffic – High-resolution images can driving additional traffic to your site from Google Image Search,

Reduce Returns – Higher resolution images can help to reduce returns by showcasing the product in as much detail as possible.

User Experience – Websites with high-quality images are perceived as more professional and trustworthy, enhancing the overall user experience, and potentially leading to longer site engagement.

User Configurable Options

GOOGLE_APPLICATION_CREDENTIALS_PATH – File path for Google Cloud service account key.

INPUT_FILE_PATH – Directory path to the input CSV file.

OUTPUT_FILE_PATH – Directory path for the output CSV file.

REQUEST_TIMEOUT – Maximum duration for HTTP request completion.

MIN_RESOLUTION – Skip source images equal or over a set resolution.

MAX_IMAGES – Maximum number of image suggestions.

SKIPPED_FILE_TYPES – List of image file extensions to be ignored.

Image Scraping Process

Because images are sourced from around the web, different Web servers have different thresholds for scraping. Because of this the script tries to be as stealthy as possible when downloading images. In addition to rotating User Agents, it uses three different methods to retrieve high resolutions images.

Attempt 1: Requests

Once a list of target URLs are found to source higher resolution images from, the script starts out by using the requests python library. Requests is the fastest way to retrieve the data we need, but also the most commonly blocked.

Attempt 2: PyPpeteer

Any images that failed to download (403 error) are then passed to PyPpeteer for a second attempt.

Attempt 3: Selenium

If PyPpeteer fails, then we have one last hope – Selenium. Selenium is resource heavy, but mimics a human in the browser better than the other two libraries. This is usually enough to finally get the image.

You could go even further with this script to employ the use of proxy servers if you want the most complete result set available which I’ll leave an exercise for the reader.

Contact Me

If you need help modifying or deploying this to your organisation, drop me a message via the contact form and I’ll get straight back to you.